AI in Project Management: Automating GDPR Compliance with Large Language Models

In today’s digital era, data is a critical asset for businesses, driving strategic decisions and operational efficiency. As its importance grows, so does the need for regulation and protection. The General Data Protection Regulation (GDPR), enforceable since May 25, 2018, replaced the 1995 Data Protection Directive to strengthen data privacy, security, and transparency across the EU. For project managers overseeing data-driven projects, ensuring that Data Management Plans (DMPs) comply with the GDPR is a key responsibility.

However, as privacy policies expand, compliance evaluation becomes increasingly time-consuming and resource-intensive, diverting valuable project resources. Manually reviewing DMPs takes significant effort, adds to operational costs, and slows project execution. LLMs could streamline these assessments by reducing manual workload, provided their judgments remain accurate. The goal is to explore this potential: can an AI-driven system evaluate Data Management Plans, reduce effort, and improve efficiency in assessing regulatory adherence? To gain practical insights into AI-driven compliance evaluation, the experiments compare GPT-4o and Mistral, two state-of-the-art language models developed in the U.S. and Europe, respectively. The primary goal of this comparison is to determine whether the performance of an EU-based solution (Mistral) is comparable to that of a U.S.-based model (GPT-4o).

AI Compliance Assistant

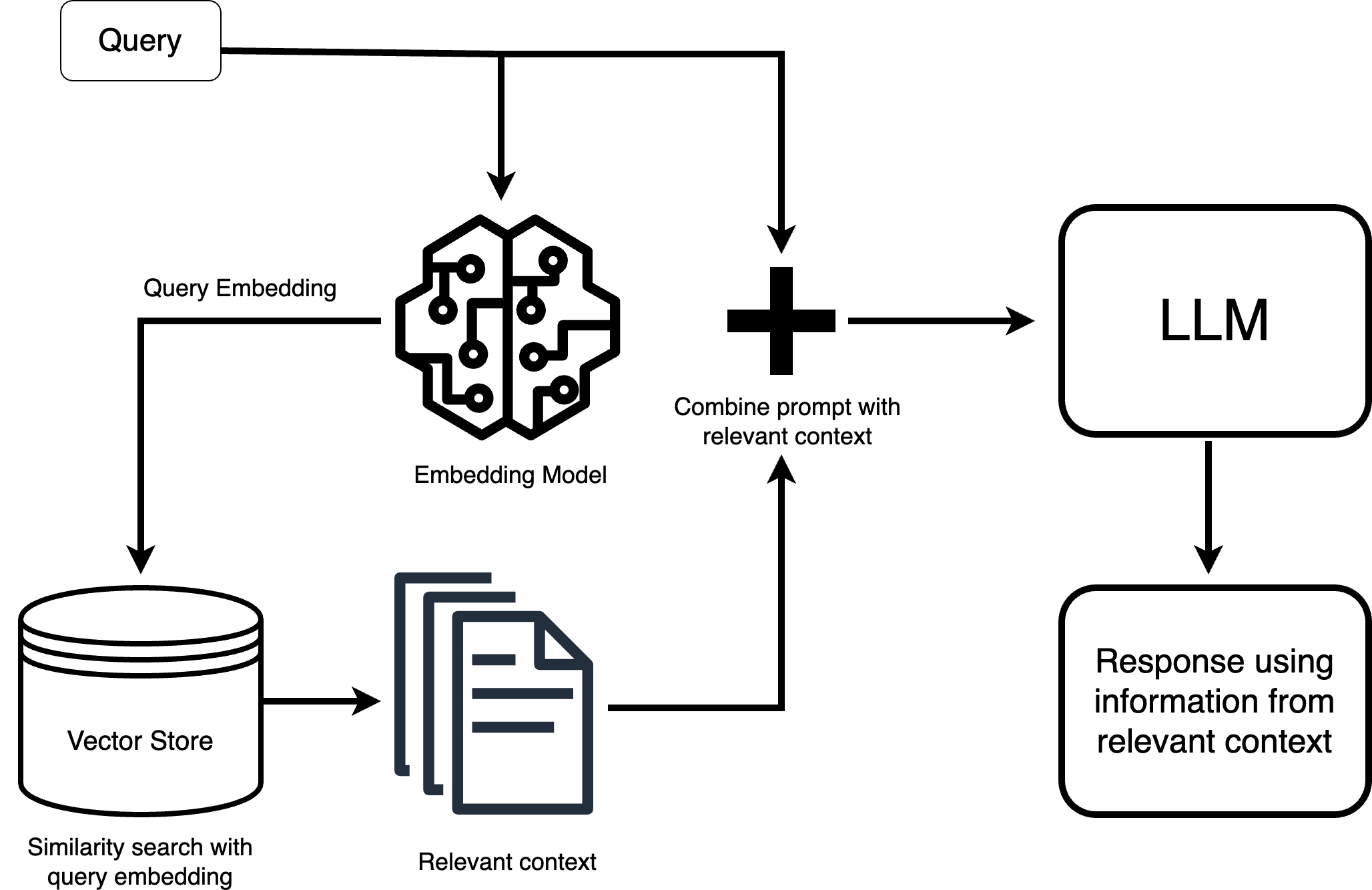

To explore the feasibility of LLMs in compliance evaluation, an AI Compliance Assistant prototype was developed to automate GDPR compliance assessments and compare its results with human evaluations. The system is built with the LangChain framework for LLM application development and AstraDB for vector-based data retrieval.

Experimental Setup

To assess the performance of LLMs in compliance evaluation, three different approaches were applied:

- Zero-Shot Prompting – The models received general instructions without specific examples, with the goal of assessing the model’s pre-existing knowledge on GDPR compliance and establishing a baseline for further evaluations.

- Role-Setup – Additional guidelines and a compliance-specific role were introduced to narrow the evaluation scope and observe how the model adjusts its classification decisions when given more structured instructions.

- RAG with Checklist Integration – A Retrieval-Augmented Generation approach was applied, combining AI-driven responses with a predefined GDPR compliance checklist.
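The three setups above differ mainly in how much guidance the prompt carries. The following is a minimal sketch of that progression in plain Python. The prompt wording, the function names, and the sample checklist items are all assumptions made for illustration; the article does not reproduce the prompts or the checklist used in the experiments.

```python
from typing import Sequence

# Hypothetical wording: the actual prompts are not given in the article.
BASE_INSTRUCTION = (
    "Assess whether the following Data Management Plan (DMP) complies with "
    "the GDPR. Answer 'compliant' or 'non-compliant' and justify the decision."
)

# Hypothetical role description for the Role-Setup variant.
ROLE_PREAMBLE = (
    "You are a data protection officer reviewing DMPs for GDPR compliance. "
    "Judge only what the plan states explicitly."
)


def zero_shot_prompt(dmp_text: str) -> str:
    """General instruction only: no role, no examples, no checklist."""
    return f"{BASE_INSTRUCTION}\n\nDMP:\n{dmp_text}"


def role_setup_prompt(dmp_text: str) -> str:
    """Same task, preceded by a compliance-specific role and guideline."""
    return f"{ROLE_PREAMBLE}\n\n{zero_shot_prompt(dmp_text)}"


def rag_checklist_prompt(dmp_text: str, checklist_items: Sequence[str]) -> str:
    """Role prompt plus checklist items retrieved for this DMP."""
    checklist = "\n".join(f"- {item}" for item in checklist_items)
    return (
        f"{ROLE_PREAMBLE}\n\n"
        f"Check the plan against each item of this checklist:\n{checklist}\n\n"
        f"{zero_shot_prompt(dmp_text)}"
    )


# Illustrative checklist items, not the checklist from the experiments.
sample_items = [
    "A lawful basis for processing is stated",
    "Retention periods are defined",
]
print(rag_checklist_prompt("Example plan text", sample_items))
```

In a retrieval-augmented pipeline, `checklist_items` would come from the vector store rather than a hard-coded list; it is passed in as a parameter here so the sketch runs without external services.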

Evaluation

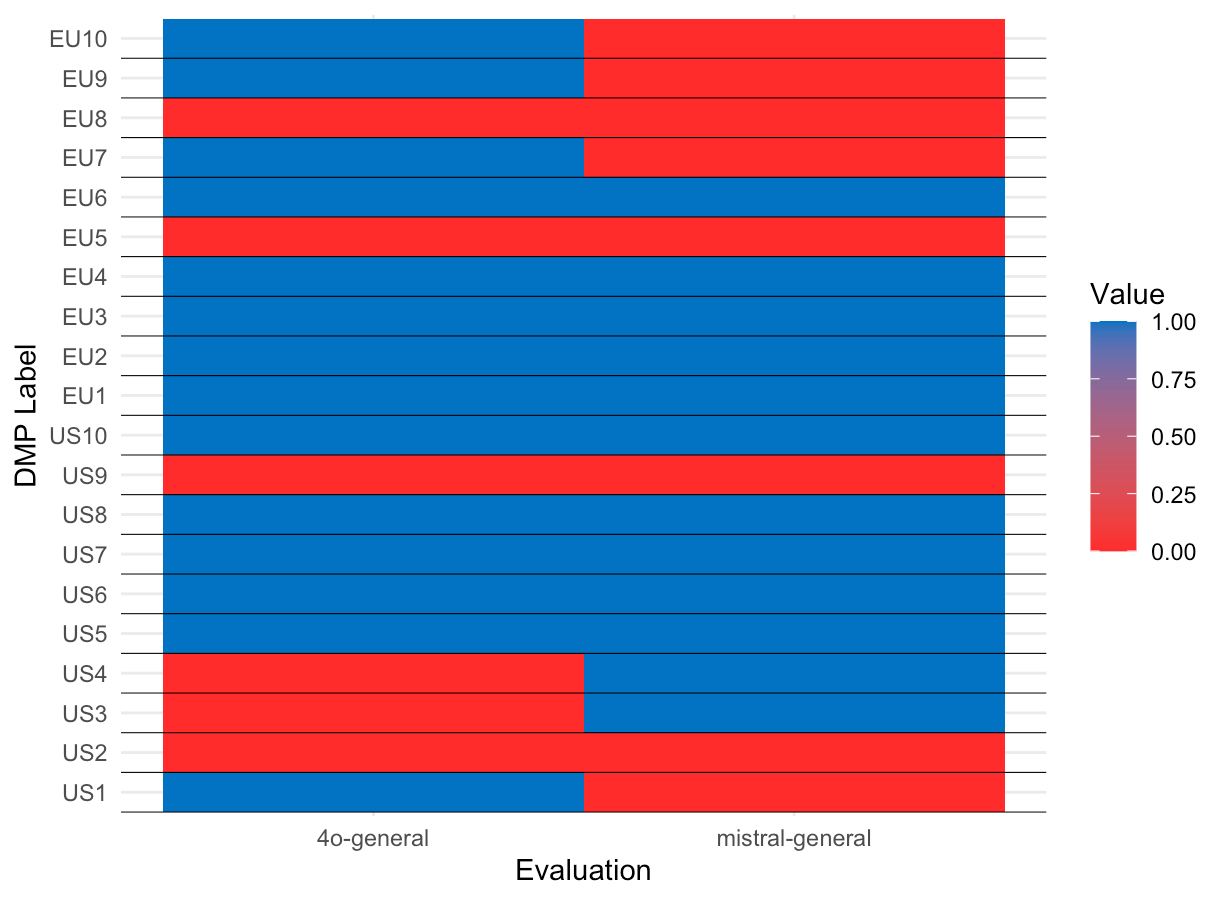

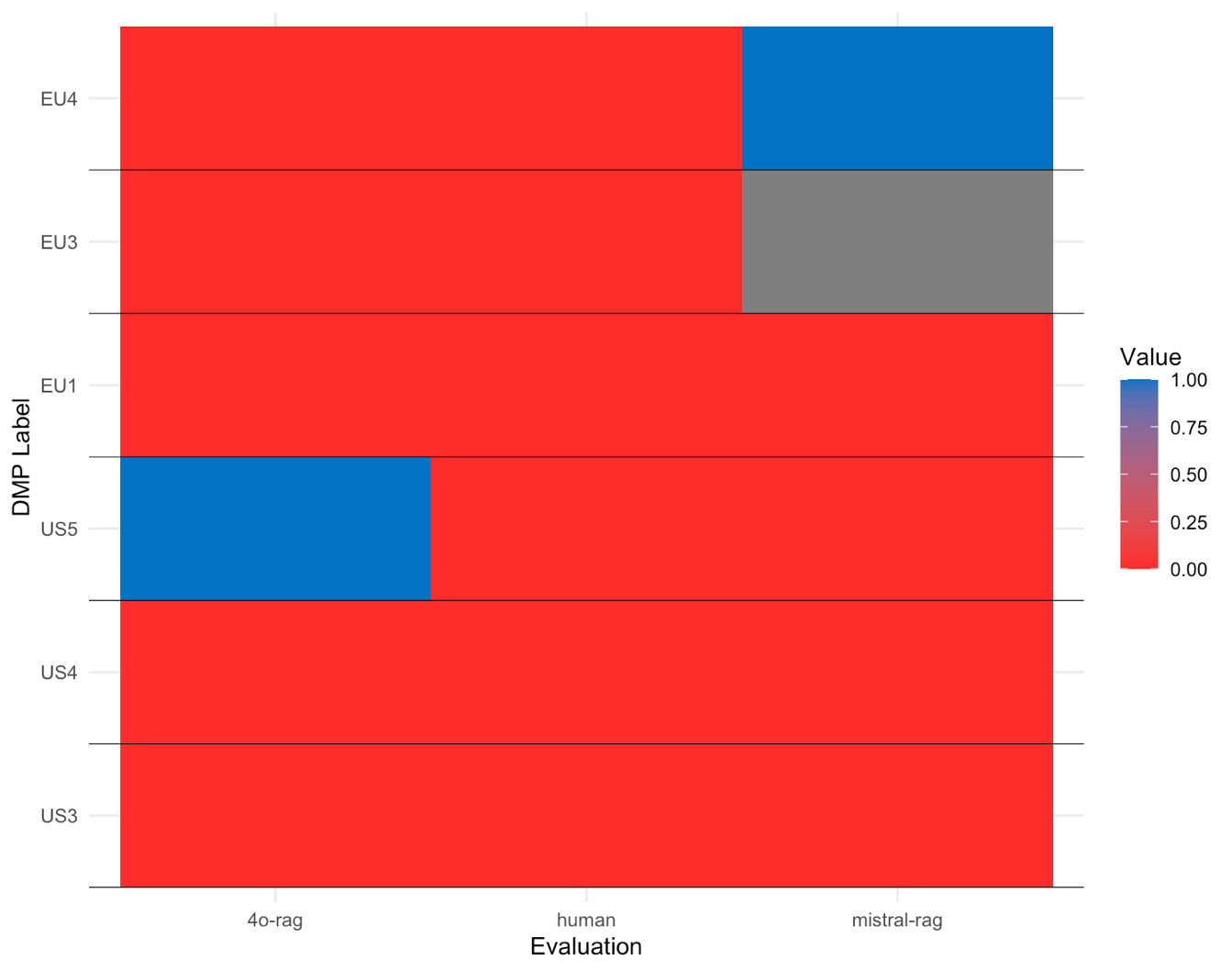

The heatmap below visualizes how the AI models evaluated compliance for different DMPs. Each row represents a DMP, labeled US1–US10 for U.S.-based plans and EU1–EU10 for European plans. The columns indicate whether the model classified each plan as compliant or non-compliant (red = non-compliant).

Zero-Shot Prompting

The GPT-4o model (4o-general) classified 14 of the 20 DMPs as compliant, suggesting a more lenient or broader interpretation of the compliance criteria.

The Mistral model (mistral-general) classified 12 of the 20 DMPs as compliant, suggesting a somewhat stricter assessment.

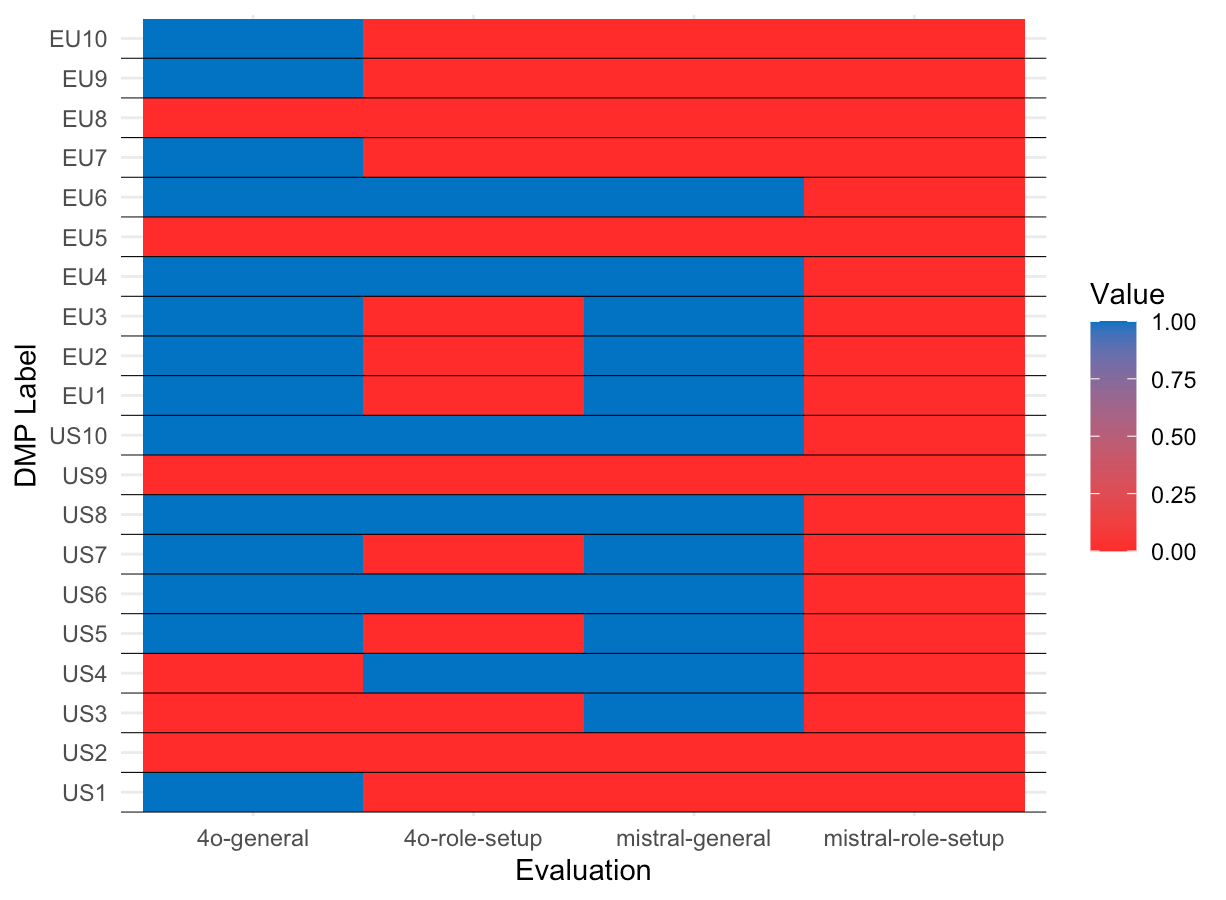

Role-Setup

With the introduction of role-specific guidelines, the models demonstrated improved response depth and structure, refining their approach to compliance evaluation.

- GPT-4o (4o-role-setup) identified 6 out of 20 DMPs as compliant, indicating a stricter evaluation compared to the zero-shot approach, where it classified 14 as compliant.

- Mistral (mistral-role-setup) classified none of the DMPs as compliant, an even stricter assessment that rejected every plan under the structured guidelines.

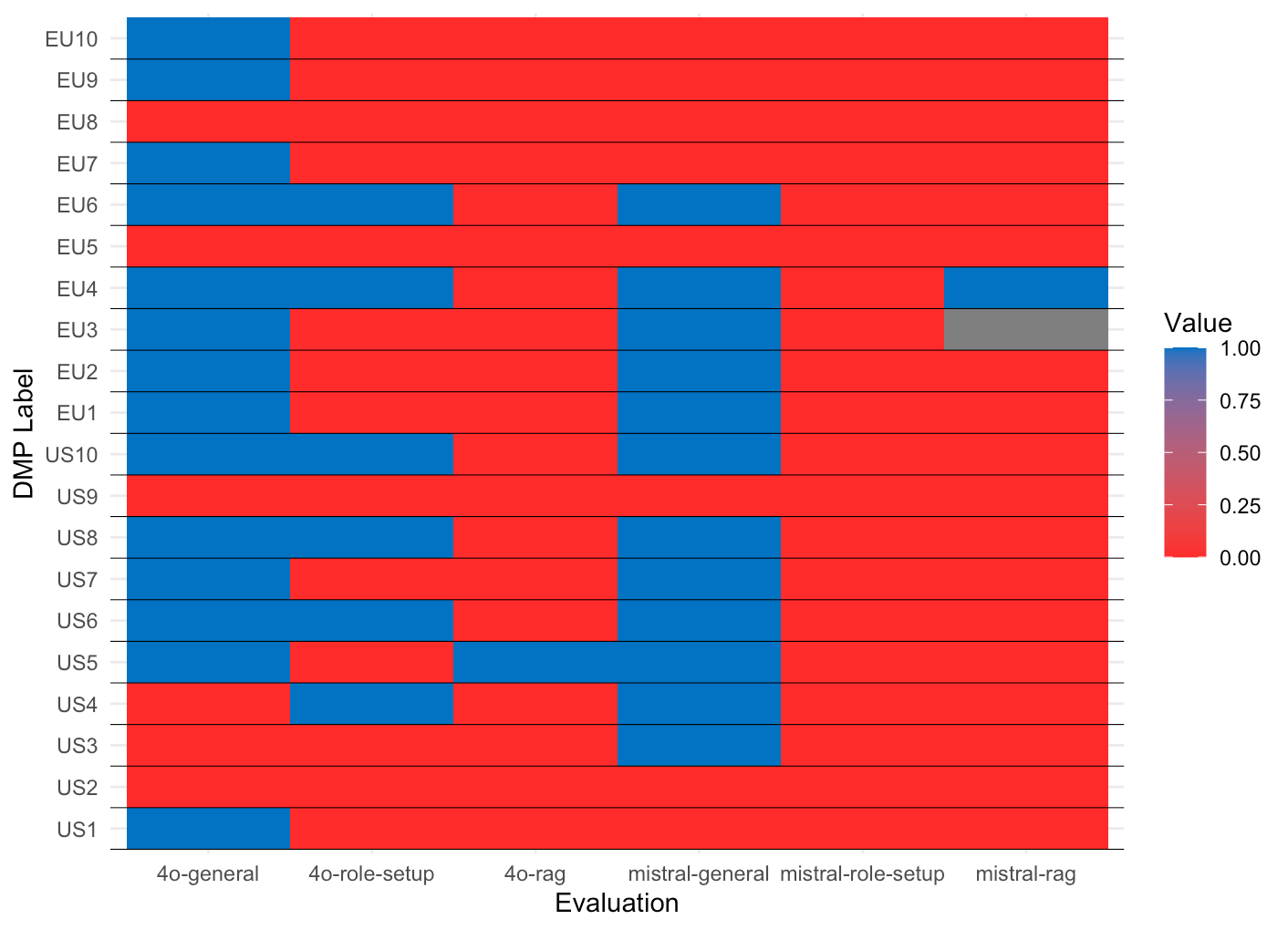
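To make the shift between the first two setups easier to compare, the reported counts can be expressed as shares of the 20 evaluated DMPs. This is a small arithmetic sketch; the counts are taken from the text above, while the dictionary layout and the function name are illustrative.

```python
TOTAL_DMPS = 20

# DMPs classified as compliant, as reported for each configuration.
compliant_counts = {
    "4o-general": 14,
    "mistral-general": 12,
    "4o-role-setup": 6,
    "mistral-role-setup": 0,
}


def compliant_share(count: int, total: int = TOTAL_DMPS) -> float:
    """Percentage of evaluated DMPs that were classified as compliant."""
    return 100 * count / total


for name, count in compliant_counts.items():
    print(f"{name}: {count}/{TOTAL_DMPS} = {compliant_share(count):.0f}%")
# 4o-general: 14/20 = 70%
# mistral-general: 12/20 = 60%
# 4o-role-setup: 6/20 = 30%
# mistral-role-setup: 0/20 = 0%
```

Adding the role and guidelines therefore lowered GPT-4o's compliant share by 40 percentage points and Mistral's by 60.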

RAG

With the GDPR checklist supplied as retrieved context, the models produced more structured and rigorous compliance assessments.

- GPT-4o (4o-rag) identified 1 out of 20 DMPs as compliant, showing a significant reduction in compliance classifications compared to previous setups.

- Mistral (mistral-rag) also classified only 1 out of 20 DMPs as compliant, further emphasizing a highly critical evaluation approach under the checklist-based structure.

- For EU3, Mistral (mistral-rag) struggled to retrieve context and returned a database (DB) error, indicating potential challenges in accessing or integrating external knowledge.
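A retrieval failure like the one reported for EU3 is easier to interpret when it is recorded as its own outcome instead of being folded into the compliant or non-compliant counts. The sketch below shows one way to do that. The `RetrievalError` class, the `Evaluation` record, and the stub retriever and classifier are hypothetical stand-ins, not the prototype's actual LangChain or AstraDB code.

```python
from dataclasses import dataclass
from typing import Callable, List


class RetrievalError(Exception):
    """Raised by the (hypothetical) retriever when context cannot be fetched."""


@dataclass
class Evaluation:
    dmp_id: str
    verdict: str  # "compliant", "non-compliant", or "error"
    detail: str = ""


def evaluate_with_rag(
    dmp_id: str,
    dmp_text: str,
    retrieve: Callable[[str], List[str]],
    classify: Callable[[str, List[str]], str],
) -> Evaluation:
    """Retrieve checklist context, then classify; keep failures visible."""
    try:
        context = retrieve(dmp_text)
    except RetrievalError as exc:
        return Evaluation(dmp_id, "error", str(exc))
    if not context:
        # An empty result is also treated as a failed retrieval.
        return Evaluation(dmp_id, "error", "retriever returned no context")
    return Evaluation(dmp_id, classify(dmp_text, context))


# Stubs standing in for the vector store and the LLM call.
def failing_retriever(text: str) -> List[str]:
    raise RetrievalError("database unavailable")


def stub_retriever(text: str) -> List[str]:
    return ["Retention periods are defined"]


def stub_classifier(text: str, context: List[str]) -> str:
    return "non-compliant"


print(evaluate_with_rag("EU3", "plan text", failing_retriever, stub_classifier))
print(evaluate_with_rag("US1", "plan text", stub_retriever, stub_classifier))
```

Keeping `"error"` as a third verdict means a failed lookup is never silently reported as a compliance decision.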

Comparison with Human Evaluation (3 US and 3 EU DMPs)

- GPT-4o (4o-rag) classified only 1 DMP (US5) as compliant, while marking all others as non-compliant, maintaining a strict assessment standard.

- Human reviewers classified all DMPs as non-compliant, reinforcing the higher threshold for compliance when evaluated manually and providing a benchmark for comparison.

- Mistral (mistral-rag) marked EU4 as compliant but struggled to retrieve context for EU3.
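Agreement with the human benchmark can be computed as the share of human-reviewed DMPs on which a model returned the same verdict. The sketch below uses placeholder IDs and invented verdicts, because the article does not list which six DMPs were reviewed or how its accuracy figures were derived. It only illustrates the calculation, with a retrieval error counted as a mismatch.

```python
from typing import Dict


def agreement(model: Dict[str, str], human: Dict[str, str]) -> float:
    """Share of human-reviewed DMPs where the model gave the same verdict."""
    matches = sum(
        1 for dmp_id, label in human.items() if model.get(dmp_id) == label
    )
    return matches / len(human)


# Placeholder IDs: the six reviewed DMPs are not named in the article.
human = {f"DMP{i}": "non-compliant" for i in range(1, 7)}

# Hypothetical model outputs for illustration only.
model_a = dict(human, DMP1="compliant")                # one disagreement
model_b = dict(human, DMP2="compliant", DMP3="error")  # one disagreement, one error

print(f"{agreement(model_a, human):.1%}")  # 83.3%
print(f"{agreement(model_b, human):.1%}")  # 66.7%
```

With six all-non-compliant human labels, one deviating verdict gives 5/6 agreement, and one deviating verdict plus one error gives 4/6.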

Conclusion

The findings highlight key trade-offs between usability, accuracy, speed, and cost, shaping the feasibility of AI-driven compliance assessments:

- LLMs can support GDPR compliance assessments, but their effectiveness depends heavily on well-structured instructions.

- RAG with checklist integration improved structure and consistency and produced the strictest evaluations, with each model classifying only 1 of 20 DMPs as compliant.

- GPT-4o outperformed Mistral in accuracy (83% vs. 66%) and speed (26% faster), but at a higher cost.

- Mistral proved to be 31% more cost-efficient, making it a viable alternative, but struggled with usability issues, including retrieval errors.

- EU-based models like Mistral face scalability challenges, highlighting the need for further optimization in open-source regulatory AI solutions.

Overall, while LLMs demonstrate promising capabilities in automating compliance evaluations, their reliability is contingent on structured guidance and integration with external regulatory knowledge.